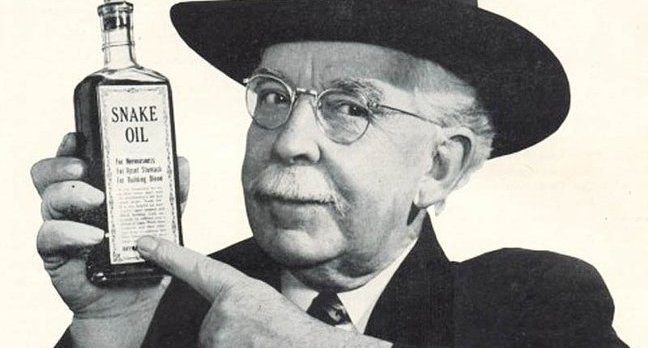

The Rocket AI hoax, which purportedly showed that investors should not buy into AI “hype”, was proudly promoted here.

The problem here is that neither Anders Sandberg nor Riva-Melissa Tez can tell whether an AI startup’s tech is novel. That is why investors have due diligence. If you are an investor, I suggest that you hire a competent machine learning researcher (read: at least a PhD and a successful track record of publications, with some innovation) to help you tell them apart, instead of dodgy philosophers. You will have to pay around $500 per hour for such expert opinions. As for Riva’s comments about deep learning and reinforcement learning, those were originally statements by the likes of Juergen and myself. We are aware of when certain techniques were invented, unlike some of the popularizers and the keen plagiarizers she may have met at NIPS. We (being AGI theorists) pointed out how this older research was recycled long before she did, and it is only because we clarified this on public forums that people have the slightest idea that this is the case. I myself told everyone that AIXI is just applying Solomonoff Induction to Sutton’s Reinforcement Learning model, where we replace his linear model with a universal predictor. Likewise, Q-learning goes back to 1989. A lot of such research has precedents. That is why we have this thing called citation, although some researchers apparently do not know how to cite prior research. We have not yet fully demonstrated human-level intelligence; therefore, there is work to be done.

Her observation about deep learning and reinforcement learning is fair. However, when she insinuates, without really having a technical understanding of the field and hastily generalizing from those two examples, that there are no truly new innovations, that is simply science denial. Not all researchers are equivalent, and they do not work in the same ways. It is not AI “hype” that is the obstacle here. The problem is that Silicon Valley is full of scammers: perhaps more than half of Valley startups are scammy or gimmicky in some thinly-veiled manner, and they are exploiting this trend as well, a trend that is backed by all too real scientific results. If an investor is so naive that he will believe anything he hears, he probably deserves to lose money. More than half of all Valley startups are scams or half-scams; that is not quite news. And in AI it is even easier to be fooled, because a non-expert cannot tell much about any important matter in a field that is as advanced as quantum physics. It is not an almost-trivial Computer Science subject like database systems; even LeCun might hold wrong ideas. That is precisely how Hum.AI, or those lame chatbot startups with ELIZA knock-off tech, can fool investors, journalists, and the general public. I myself told many people how scammy Hum.AI was the moment it came out. In the same essay, I also pointed out that the MIRI/FHI community itself, which includes Sandberg, is a similar scam, in fact the largest transhumanist scam, as they earn their living from AI scaremongering. Their community employs a large group of people; they even hired stupid internet trolls to discourage their online critics, as Scientology has in the past. FHI is a fraud. They are conning people with nonsensical, superstitious, badly-crafted, derivative science-fiction/horror stories, and their dull writing is mostly a poor imitation of The Terminator and The Matrix.

I bet that a lot of people could fool most investors with something obsolete, like an algorithm out of Sutton’s 1998 RL textbook or some recycled old MSc thesis. That only proves how error-prone the methodology of those investors is. But if you claim that some of the most innovative researchers at the human-level AI conference are not making real progress, then I will have to suggest that you might be wrong. It is quite elementary. Their hoax may have been successful, but the conclusion they are drawing is almost entirely wrong. You may keep funding startups with “special data”. That is not where the most value lies. That thinking is from maybe the early 2000s, when I worked mostly on data mining and simpler machine learning methods like SVMs. However, even by 2000-2004, there were researchers like me who were working on human-level AI. We did make some progress in about 15 years, a good deal of it indeed, and this progress has been proven by experiments and backed by advanced mathematical theory. I have no idea why Permutation Ventures are emphasizing this as if it is generally true:

> Investors aren’t involved enough in the space to know that the first Reinforcement Learning textbook was written in 1998, or that what separates a successful applied machine learning company is often the novelty, quality and/or quantity of the data they have access to. Clever teams are exploiting the obscurity and cachet to raise more money, knowing that investors and the press have little understanding of how machine learning works in practice.

Machine learning theory was first developed in the 1950s by Ray Solomonoff; that does not make it obsolete, either. Rome was not built in a day. It is still ongoing research.

I wonder if they realize that actual AI tech startups like Schmidhuber’s startup moved far beyond “deep learning” many years ago. I hope they do not regret it when they find out that their investments have been left in the dust, or that their misleading analysis has caused other investors to lose money. I believe they will fully understand what happened in about 2 years. For now, let me only say that exciting things will happen, rapidly, and that it is not about “big data”.

My suggestion to considerate readers: be smart, be skeptical, do not yield to ignorance.

Dr. Eray Özkural